Building payment infrastructure on Solana requires understanding how to properly index blockchain data for centralized applications. Whether we’re building a centralized exchange (CEX), crypto gateway, or e-commerce platform, indexing is the foundation that enables us to track user deposits, process withdrawals securely, and maintain accurate balances.

This comprehensive guide covers everything we need to know about Solana indexing, from basic concepts to advanced security considerations for production systems.

What is Indexing?

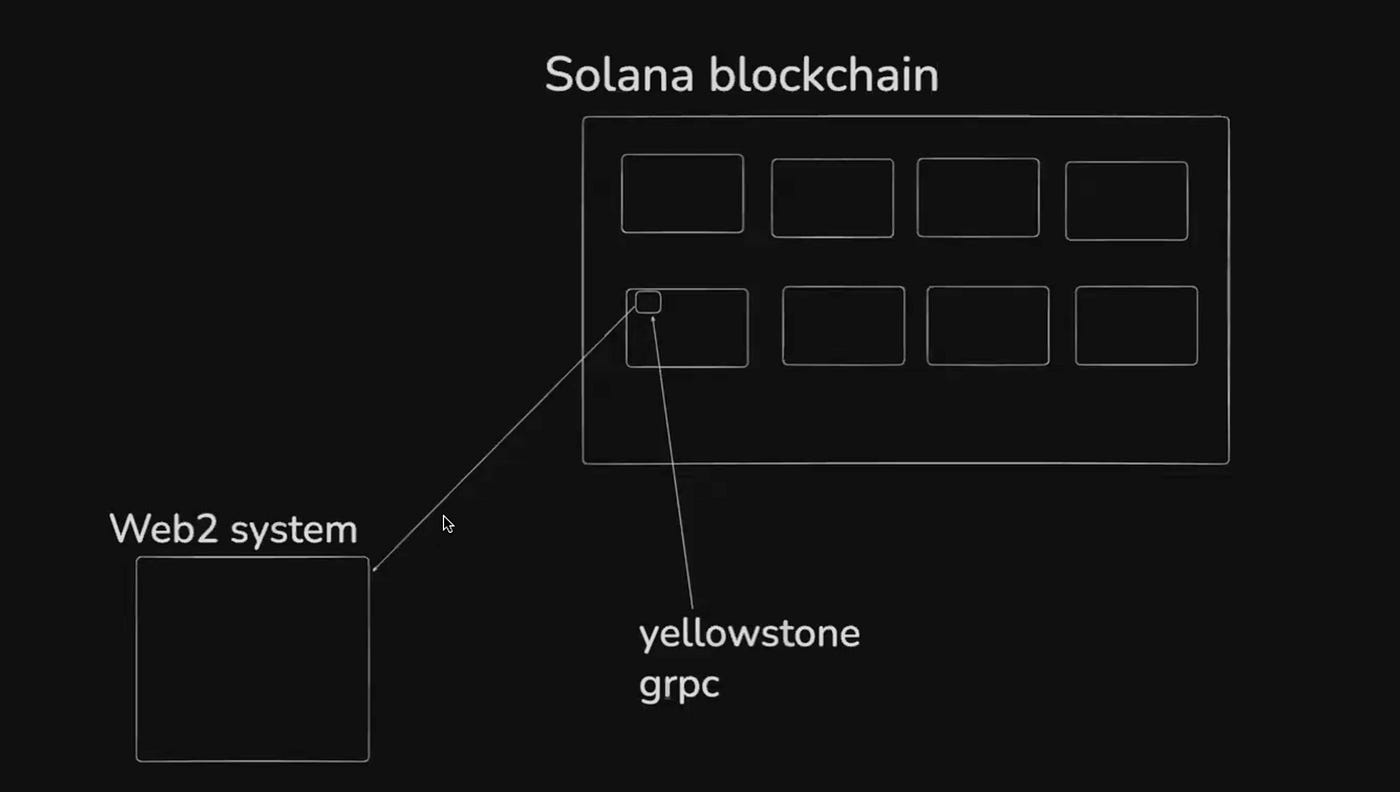

Indexing means copying over the state of a blockchain to a Web2 system. In the context of Solana, it involves extracting specific transaction data from the high-throughput blockchain and storing it in traditional databases like PostgreSQL or MongoDB.

The goal isn’t to copy the entire blockchain — that would be expensive and unnecessary. Instead, we focus on indexing specific addresses or transactions relevant to our application. For example, we might want to track:

All transactions to our exchange’s deposit address

Activity on specific liquidity pools

Transactions involving particular token contracts

User deposits and withdrawals

The Solana blockchain has processed hundreds of transactions per second since genesis, generating terabytes of data. Our indexing system needs to filter this stream efficiently and capture only the transactions we care about.

How to Index: Yellowstone & Geyser

The most robust approach to Solana indexing is using Yellowstone, built on top of the Geyser plugin. These tools allow us to subscribe to real-time blockchain events as they happen.

Understanding the Architecture

When validators on the Solana network receive transactions and create blocks, they can run additional processes (plugins) that extract and forward specific transaction data. Yellowstone provides a gRPC interface to these plugins, enabling us to:

Subscribe to specific accounts or programs

Filter transactions based on our criteria

Receive real-time updates as transactions are confirmed
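To make the filtering idea concrete, here is a minimal sketch of how a transaction filter can be evaluated. It is a local model, not the Yellowstone client API: the field names (accountInclude, accountExclude, accountRequired) are modeled on the transaction filter of a Yellowstone subscribe request, the vote/failed handling is simplified, and DEPOSIT_ADDRESS is a hypothetical placeholder.

```typescript
// Local model of a transaction filter (assumed field names, simplified semantics).
interface TransactionFilter {
  vote: boolean;             // include vote transactions?
  failed: boolean;           // include failed transactions?
  accountInclude: string[];  // match if ANY of these accounts appears (empty = no constraint)
  accountExclude: string[];  // reject if ANY of these accounts appears
  accountRequired: string[]; // match only if ALL of these accounts appear
}

// Simplified view of a transaction update delivered by the stream.
interface TransactionUpdate {
  signature: string;
  isVote: boolean;
  failed: boolean;
  accountKeys: string[];
}

function matchesFilter(tx: TransactionUpdate, f: TransactionFilter): boolean {
  if (tx.isVote && !f.vote) return false;
  if (tx.failed && !f.failed) return false;
  const keys = new Set(tx.accountKeys);
  if (f.accountInclude.length > 0 && !f.accountInclude.some((a) => keys.has(a))) {
    return false;
  }
  if (f.accountExclude.some((a) => keys.has(a))) return false;
  return f.accountRequired.every((a) => keys.has(a));
}

// Hypothetical deposit address; the Memo program ID is the real one.
const DEPOSIT_ADDRESS = "OurDepositAddressPlaceholder";
const MEMO_PROGRAM_ID = "MemoSq4gqABAXKb96qnH8TysNcWxMyWCqXgDLGmfcHr";

// Only successful, non-vote transactions that touch our address AND call the Memo program.
const depositFilter: TransactionFilter = {
  vote: false,
  failed: false,
  accountInclude: [DEPOSIT_ADDRESS],
  accountExclude: [],
  accountRequired: [MEMO_PROGRAM_ID],
};
```

With a real provider, the equivalent filter would be sent to the server once and the matching happens on the provider's side, so our client only receives the transactions it asked for.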

Available RPC Providers

Several providers offer Yellowstone services:

Triton: Created Yellowstone, offers premium indexing services

Helius: Competitor with their own indexing tools

QuickNode: Provides RPC and indexing infrastructure

Alchemy: Web3 infrastructure provider

Pricing and Access

Free Yellowstone servers exist (like parafi.tech) but with limitations—typically only allowing subscriptions to predefined accounts like the vote program. For production use, expect to pay:

Shift.io: ~$200/month for one connection

Triton: ~$1000/month for premium access

Custom validators: Exchanges often run their own validators with Geyser plugins

The Problem with Naive Payment Flows

Before diving into proper indexing solutions, let’s understand why simple payment flows fail. Consider a basic e-commerce site selling t-shirts for 0.5 SOL:

Broken Flow:

User sends 0.5 SOL to our address

User’s client gets the transaction signature

Client forwards the signature to our backend

Backend verifies the signature and ships the product

Critical Problems:

1. Lack of Atomicity (Client Crash) If the user’s client crashes after step 1 but before step 3, the money is sent, but our backend never knows. The user loses their SOL with no product shipped.

2. Transaction Sniping (Impersonation) An attacker can monitor our payment address, grab any transaction signature, and claim it with their own session cookie before the real user can. Our backend has no way to verify who actually sent the payment.

The Solution: Memo Program + Indexing

Solana’s Memo Program (MemoSq4gqABAXKb96qnH8TysNcWxMyWCqXgDLGmfcHr) provides the solution. This program logs arbitrary strings on-chain, allowing us to attach identifiers to transactions.

Secure Architecture:

Frontend requests to make a purchase

Web2 Backend generates a unique identifier (random number or user ID) and returns it

Frontend constructs a transaction with TWO instructions:

system_program::transfer to send SOL to our address

memo_program call with the unique identifier

User signs and sends this atomic transaction

Indexer (Yellowstone client) sees the transaction and parses the memo

The processor uses the identifier to credit the correct user

Implementation Example:

import {
  Connection,
  Keypair,
  LAMPORTS_PER_SOL,
  SystemProgram,
  Transaction,
  sendAndConfirmTransaction
} from "@solana/web3.js";
import { createMemoInstruction } from "@solana/spl-memo";

// Connect to a local Solana cluster
const connection = new Connection("http://localhost:8899", "confirmed");

// Create keypair for the fee payer
const feePayer = Keypair.generate();

// Request airdrop
const airdropSignature = await connection.requestAirdrop(
  feePayer.publicKey,
  LAMPORTS_PER_SOL
);

// Confirm airdrop
const { blockhash, lastValidBlockHeight } =
  await connection.getLatestBlockhash();
await connection.confirmTransaction({
  signature: airdropSignature,
  blockhash,
  lastValidBlockHeight
});

// Create transaction with transfer and memo.
// In the payment flow, the memo string is the unique identifier issued by the backend.
const memoInstruction = createMemoInstruction("Hello, World!");
const sendSolana = SystemProgram.transfer({
  fromPubkey: feePayer.publicKey,
  toPubkey: Keypair.generate().publicKey, // placeholder for our payment address
  lamports: 0.5 * LAMPORTS_PER_SOL
});
const transaction = new Transaction()
  .add(sendSolana)
  .add(memoInstruction);

// Sign and send
const transactionSignature = await sendAndConfirmTransaction(
  connection,
  transaction,
  [feePayer]
);
console.log("Transaction Signature: ", transactionSignature);

Why This Works:

Solves Atomicity: The indexer runs independently and will always see confirmed transactions

Prevents Sniping: Payment and user identifier are locked together in one atomic transaction

Cost-Effective: We can use simple RPC polling instead of expensive Yellowstone for single-address indexing
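On the indexer side, the memo has to be pulled out of the confirmed transaction. One option is to read it from the transaction's log messages. The sketch below assumes the Memo program logs a line of the form `Program log: Memo (len N): "text"` and that the identifier contains no characters that would be escaped in the log; extractMemos is a hypothetical helper name. It tracks which program is currently executing so that a different program printing a look-alike line is ignored.

```typescript
const MEMO_PROGRAM_ID = "MemoSq4gqABAXKb96qnH8TysNcWxMyWCqXgDLGmfcHr";

// Returns every memo string logged by the Memo program itself.
function extractMemos(logMessages: string[]): string[] {
  const memos: string[] = [];
  const stack: string[] = []; // program IDs currently executing (handles nested calls)

  for (const line of logMessages) {
    const invoke = /^Program (\w+) invoke \[\d+\]$/.exec(line);
    if (invoke) {
      stack.push(invoke[1]);
      continue;
    }
    if (/^Program \w+ (success|failed)/.test(line)) {
      stack.pop();
      continue;
    }
    const memo = /^Program log: Memo \(len \d+\): "(.*)"$/.exec(line);
    // Accept the line only when the Memo program is the one that logged it.
    if (memo && stack[stack.length - 1] === MEMO_PROGRAM_ID) {
      memos.push(memo[1]);
    }
  }
  return memos;
}

// Example log messages for a transfer + memo transaction (illustrative values).
const exampleLogs = [
  "Program 11111111111111111111111111111111 invoke [1]",
  "Program 11111111111111111111111111111111 success",
  "Program MemoSq4gqABAXKb96qnH8TysNcWxMyWCqXgDLGmfcHr invoke [1]",
  'Program log: Memo (len 8): "order-42"',
  "Program MemoSq4gqABAXKb96qnH8TysNcWxMyWCqXgDLGmfcHr success",
];

const parsedMemos = extractMemos(exampleLogs);
```

Parsing the memo instruction data directly from the transaction message is an alternative that avoids depending on the log format.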

Required Memo Extension for Token Accounts

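For enhanced security with token transfers, the Token-2022 program (Token Extensions) offers the Required Memo extension. Once enabled on a token account, every incoming transfer must be preceded by a memo instruction in the same transaction, otherwise the transfer fails.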

Creating Token Account with Required Memo:

import {
  Connection,
  Keypair,
  PublicKey,
  SystemProgram,
  Transaction,
  sendAndConfirmTransaction,
} from "@solana/web3.js";
import {
  TOKEN_2022_PROGRAM_ID,
  getAccountLen,
  ExtensionType,
  createInitializeAccountInstruction,
  createEnableRequiredMemoTransfersInstruction,
} from "@solana/spl-token";

export async function createTokenWithMemoExtension(
  connection: Connection,
  payer: Keypair,
  tokenAccountKeypair: Keypair,
  mint: PublicKey,
): Promise<string> {
  // Create account instruction, sized to hold the MemoTransfer extension
  const accountLen = getAccountLen([ExtensionType.MemoTransfer]);
  const lamports = await connection.getMinimumBalanceForRentExemption(accountLen);
  const createAccountInstruction = SystemProgram.createAccount({
    fromPubkey: payer.publicKey,
    newAccountPubkey: tokenAccountKeypair.publicKey,
    space: accountLen,
    lamports,
    programId: TOKEN_2022_PROGRAM_ID,
  });

  // Initialize account instruction (payer becomes the token account owner)
  const initializeAccountInstruction = createInitializeAccountInstruction(
    tokenAccountKeypair.publicKey,
    mint,
    payer.publicKey,
    TOKEN_2022_PROGRAM_ID,
  );

  // Enable required memo transfers instruction
  const enableRequiredMemoTransfersInstruction =
    createEnableRequiredMemoTransfersInstruction(
      tokenAccountKeypair.publicKey,
      payer.publicKey,
      undefined,
      TOKEN_2022_PROGRAM_ID,
    );

  // Send transaction
  const transaction = new Transaction().add(
    createAccountInstruction,
    initializeAccountInstruction,
    enableRequiredMemoTransfersInstruction,
  );
  return await sendAndConfirmTransaction(
    connection,
    transaction,
    [payer, tokenAccountKeypair],
  );
}

Transferring to Required Memo Account:

import {
  PublicKey,
  Transaction,
  TransactionInstruction,
  sendAndConfirmTransaction,
} from "@solana/web3.js";
import { TOKEN_2022_PROGRAM_ID, createTransferInstruction } from "@solana/spl-token";

// connection, payer, sourceAccount, destinationAccount and transferAmount
// are assumed to be defined by the surrounding code.
const message = "Hello, Solana";

const transaction = new Transaction().add(
  // The memo instruction must come before the transfer
  new TransactionInstruction({
    keys: [{ pubkey: payer.publicKey, isSigner: true, isWritable: true }],
    data: Buffer.from(message, "utf-8"),
    programId: new PublicKey("MemoSq4gqABAXKb96qnH8TysNcWxMyWCqXgDLGmfcHr"),
  }),
  createTransferInstruction(
    sourceAccount,
    destinationAccount,
    payer.publicKey,
    transferAmount,
    undefined,
    TOKEN_2022_PROGRAM_ID,
  ),
);

await sendAndConfirmTransaction(connection, transaction, [payer]);

Deposit Architecture Patterns

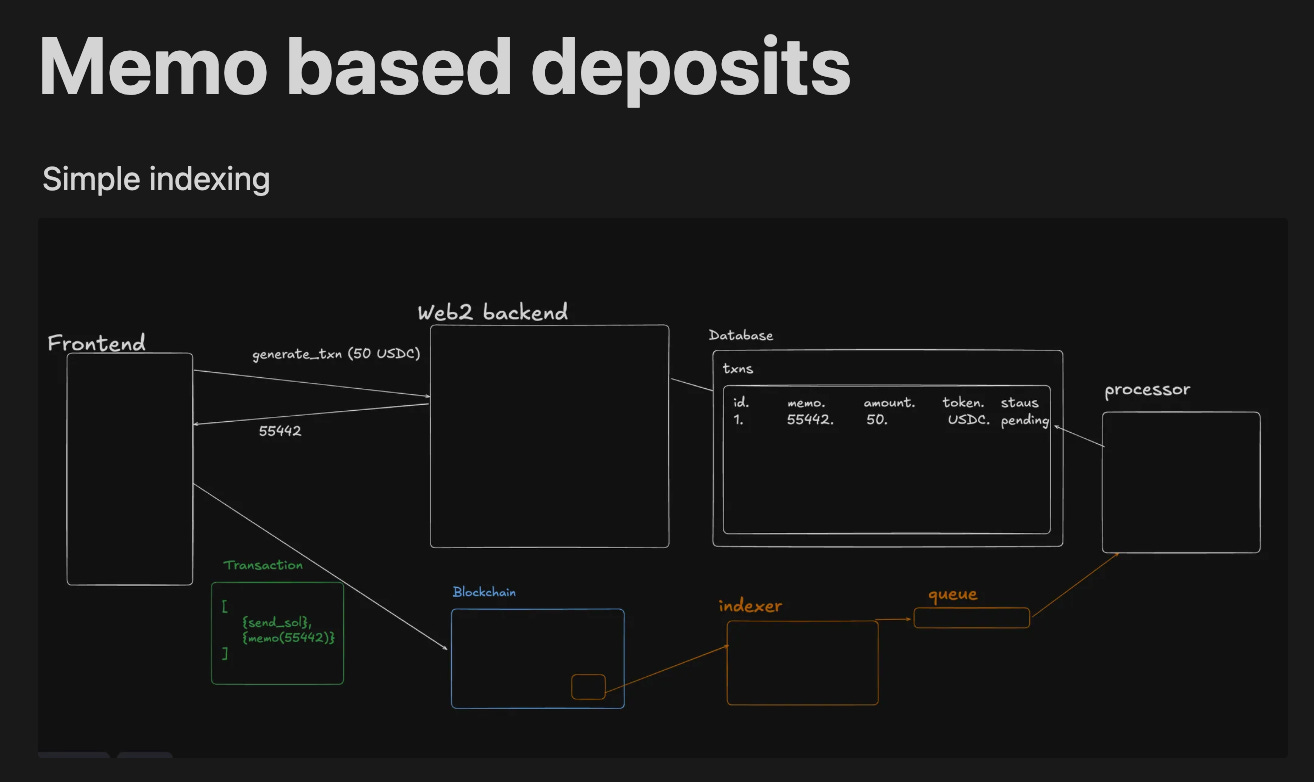

Pattern 1: Memo-Based Deposits (Recommended)

All users send funds to a single address with unique memo identifiers:

Advantages:

Simple to implement and maintain

Cost-effective indexing (can use simple RPC polling)

No private key management complexity

Perfect for most applications

Process:

User requests a deposit

Backend generates a unique seed/nonce, stores it in the database as ‘pending’

Frontend creates a transaction with transfer + memo containing the seed

Indexer monitors a single address and processes transactions

Processor matches memo to database record and updates user balance
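The process above can be sketched as a small in-memory processor. This is an illustration under stated assumptions, not a production design: the class and method names are hypothetical, the maps stand in for database tables, each nonce is treated as single-use, and amounts are plain numbers in lamports.

```typescript
type ProcessResult =
  | "credited"
  | "duplicate_signature"
  | "unknown_memo"
  | "nonce_already_used";

interface PendingDeposit {
  userId: number;
  status: "pending" | "credited";
}

// What the indexer hands to the processor for each incoming transfer.
interface IndexedTransfer {
  signature: string;
  memo: string;
  lamports: number;
}

class DepositProcessor {
  private deposits = new Map<string, PendingDeposit>(); // nonce -> pending record
  private balances = new Map<number, number>();         // userId -> lamports
  private seenSignatures = new Set<string>();           // guards against reprocessing

  // Backend step: store the nonce as 'pending' before returning it to the frontend.
  createDeposit(userId: number, nonce: string): void {
    this.deposits.set(nonce, { userId, status: "pending" });
  }

  // Processor step: match the memo to a pending record and credit the user once.
  process(t: IndexedTransfer): ProcessResult {
    if (this.seenSignatures.has(t.signature)) return "duplicate_signature";
    this.seenSignatures.add(t.signature);

    const deposit = this.deposits.get(t.memo);
    if (!deposit) return "unknown_memo";
    if (deposit.status !== "pending") return "nonce_already_used";

    deposit.status = "credited";
    this.balances.set(deposit.userId, this.balanceOf(deposit.userId) + t.lamports);
    return "credited";
  }

  balanceOf(userId: number): number {
    return this.balances.get(userId) ?? 0;
  }
}
```

Transfers that come back as unknown_memo or nonce_already_used still moved real funds, so a real system would route them to manual review rather than drop them.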

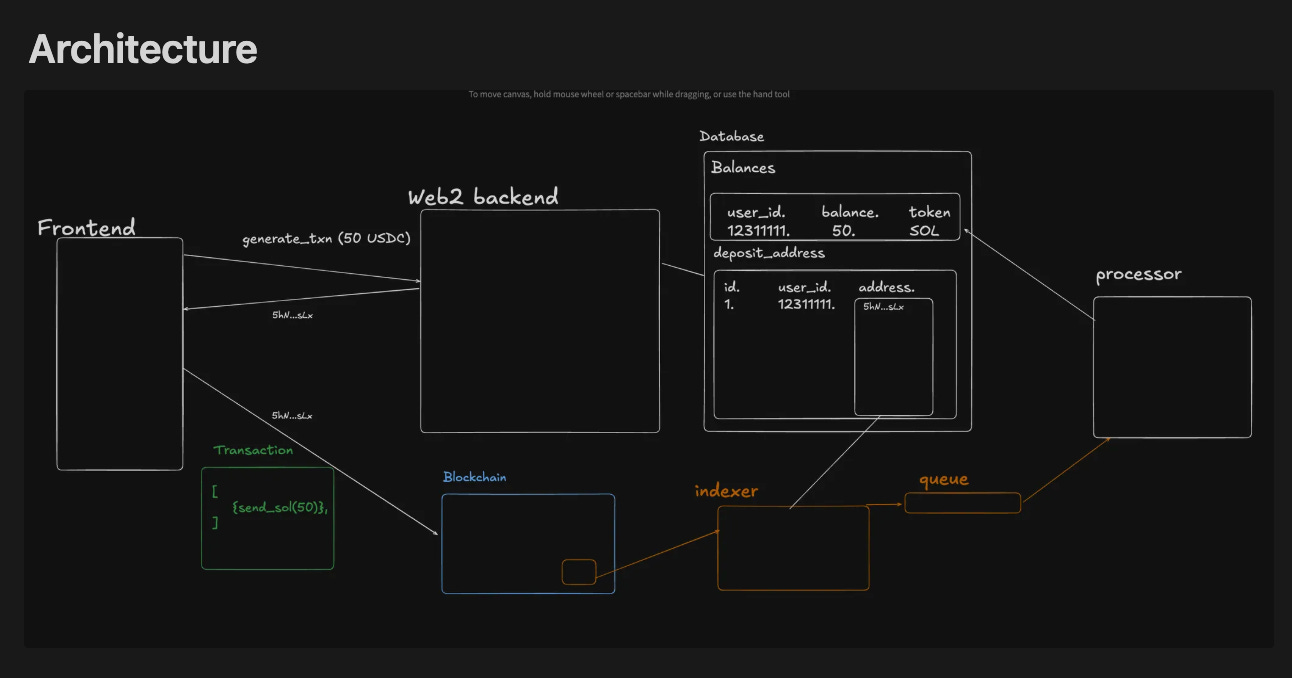

Pattern 2: Unique Deposit Addresses (Binance-Style)

Every user gets their own unique deposit address:

Advantages:

Familiar to users from traditional exchanges

Clear separation of user funds

Easy to track individual user activity

Disadvantages:

Complex private key management for millions of addresses

Requires fund consolidation (“sweeper”) process

Higher gas costs for moving funds between addresses

More complex indexing (must monitor many addresses)

The Sweeper Problem

When using unique deposit addresses, funds get scattered across thousands of wallets. A sweeper process must periodically consolidate these funds:

Monitor all user deposit addresses for incoming funds

Calculate gas fees needed to move funds from each address

Send SOL to addresses that need gas for token transfers

Sweep funds from deposit addresses to the main treasury

Time sweeps for periods of low network fees to reduce cost

This complexity makes memo-based deposits preferable for most applications.
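To give a feel for the sweeper's bookkeeping, here is a minimal planning sketch for native SOL only. It assumes each deposit address pays its own transaction fee and is drained completely, uses the 5,000 lamport base fee per signature, and ignores priority fees and token accounts; planSweeps and minSweepLamports are hypothetical names.

```typescript
const BASE_FEE_LAMPORTS = 5000; // base fee per signature

interface DepositAddressBalance {
  address: string;
  lamports: number;
}

interface SweepPlanEntry {
  address: string;
  sweepLamports: number; // amount that arrives in the treasury after the fee
}

// Pick the addresses worth sweeping: what is left after the fee must reach the threshold.
function planSweeps(
  balances: DepositAddressBalance[],
  minSweepLamports: number,
): SweepPlanEntry[] {
  return balances
    .filter((b) => b.lamports - BASE_FEE_LAMPORTS >= minSweepLamports)
    .map((b) => ({
      address: b.address,
      sweepLamports: b.lamports - BASE_FEE_LAMPORTS,
    }));
}

// Illustrative balances for three hypothetical deposit addresses.
const sweepPlan = planSweeps(
  [
    { address: "userDepositA", lamports: 1000000 },
    { address: "userDepositB", lamports: 6000 },
    { address: "userDepositC", lamports: 0 },
  ],
  10000,
);
```

Token sweeps add the step listed above of first funding each address with enough SOL to pay for its own transfer, which is where most of the operational cost comes from.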

Withdrawal Architecture and Security

Withdrawals are the most critical and dangerous operations in any centralized crypto platform. Once funds leave our control, they cannot be recovered, making security paramount.

The Withdrawal Problem

When a user wants to withdraw 100 SOL from their 400 SOL balance:

The database shows that 400 SOL belongs to the user

The actual SOL sits in our centralized “hot wallet”

We must atomically reduce the user’s database balance AND send a blockchain transaction

Any failure in this process can result in lost funds or double-spending

Naive Withdrawal Flow (DANGEROUS):

// Pseudocode: intentionally flawed, for illustration only
app.post('/withdraw', async (req, res) => {
  // 1. Check user's balance (400 SOL)

  // 2. REDUCE balance in DB (set to 300 SOL)
  await db.balances.update({ userId: 1, currency: 'SOL' }, { $inc: { balance: -100 } });

  // 3. Get MPCs to sign withdrawal transaction
  const signature = MPC1.sign() + MPC2.sign() + MPC3.sign();

  // 4. Send transaction to blockchain
  connection.sendTransaction(signature);
});

Critical Problems:

1. Database Before Blockchain

If we reduce the balance first, but the blockchain transaction fails (network issues, old blockhash), the user loses their funds in the database, but the money never leaves our wallet.

2. Blockchain Before Database

If the blockchain transaction succeeds but the database update fails (DB crash), the user receives their 100 SOL but still has 400 SOL in their account — they can withdraw again (double-spend).

3. Race Conditions

Multiple simultaneous withdrawal requests could all check the same balance, all see sufficient funds, and all proceed — allowing withdrawal of more funds than available.

Robust Withdrawal Implementation

Queue-Based Processing

The solution is using a message queue system (like Kafka) for sequential processing:

API Endpoint validates the request and places it in the withdrawal queue

Single Worker process pulls requests one by one:

Checks and locks the user’s balance

Creates and signs a transaction

Sends transaction to blockchain

Updates the database on confirmation

Acknowledges the queue message
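The "checks and locks the user's balance" step can be modeled as a reserve/commit/release ledger. The sketch below is an in-memory illustration with hypothetical names; in a real system each method would be a database transaction or conditional update, and the single worker guarantees that no two reservations for the same user interleave.

```typescript
interface UserAccount {
  available: number; // funds the user can still withdraw or trade
  locked: number;    // funds reserved for in-flight withdrawals
}

class WithdrawalLedger {
  private accounts = new Map<number, UserAccount>();

  private account(userId: number): UserAccount {
    let acc = this.accounts.get(userId);
    if (!acc) {
      acc = { available: 0, locked: 0 };
      this.accounts.set(userId, acc);
    }
    return acc;
  }

  deposit(userId: number, amount: number): void {
    this.account(userId).available += amount;
  }

  // Before signing: move funds from available to locked, or refuse.
  reserve(userId: number, amount: number): boolean {
    const acc = this.account(userId);
    if (amount <= 0 || acc.available < amount) return false;
    acc.available -= amount;
    acc.locked += amount;
    return true;
  }

  // After on-chain confirmation: the locked funds have left the platform.
  commit(userId: number, amount: number): void {
    const acc = this.account(userId);
    if (acc.locked < amount) throw new Error("commit exceeds locked funds");
    acc.locked -= amount;
  }

  // After a definitive failure (the transaction can no longer land): unlock.
  release(userId: number, amount: number): void {
    const acc = this.account(userId);
    if (acc.locked < amount) throw new Error("release exceeds locked funds");
    acc.locked -= amount;
    acc.available += amount;
  }

  snapshot(userId: number): UserAccount {
    return { ...this.account(userId) };
  }
}
```

Because the balance is neither fully debited nor left untouched while the transaction is in flight, a failed broadcast can be rolled back with release, and a second request cannot spend the same funds in the meantime.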

Idempotent Withdrawals

To handle worker crashes, we must make withdrawals idempotent (safe to retry):

Critical Insight: Store signature BEFORE broadcasting

import bs58 from "bs58";

async function processWithdrawal(withdrawalRequest) {
  // 1. Create withdrawal transaction
  const transaction = createWithdrawalTx(withdrawalRequest);

  // 2. Get MPC signatures (assumed here to return the fully signed transaction)
  const signedTx = await getMPCSignatures(transaction);
  const rawTx = signedTx.serialize();
  const signature = bs58.encode(signedTx.signature);

  // 3. STORE signature (and the signed transaction) in database BEFORE broadcasting
  await db.withdrawals.update(withdrawalRequest.id, {
    signature: signature,
    rawTx: rawTx.toString("base64"),
    status: 'signed'
  });

  // 4. NOW broadcast to blockchain
  await connection.sendRawTransaction(rawTx);

  // 5. Wait for confirmation and update status
  await confirmTransaction(signature);
  await db.withdrawals.update(withdrawalRequest.id, { status: 'completed' });
  await updateUserBalance(withdrawalRequest);
}

If the worker crashes after storing the signature, a new worker can:

Check if the signature exists in the database

Query the blockchain to see if the transaction was confirmed

If confirmed, just update the database status

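If not confirmed: rebroadcast the same signed transaction while its blockhash is still valid, and create a new transaction only after the original has expired (otherwise both could land and pay out twice)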
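The recovery decision can be written as a pure function, which makes it easy to test. This sketch assumes the worker also stored the transaction's lastValidBlockHeight next to the signature, and that "confirmed" is checked at a commitment level we trust; all type and function names are hypothetical.

```typescript
type RecoveryAction =
  | "start_fresh"             // nothing was signed yet
  | "mark_completed"          // the stored transaction landed
  | "rebroadcast"             // same signed transaction may still land
  | "create_new_transaction"; // the original has expired and can never land

interface StoredWithdrawal {
  signature: string | null;
  lastValidBlockHeight: number | null;
}

interface ChainView {
  confirmed: boolean;         // was the stored signature found on-chain?
  currentBlockHeight: number;
}

function decideRecovery(w: StoredWithdrawal, chain: ChainView): RecoveryAction {
  if (w.signature === null || w.lastValidBlockHeight === null) {
    return "start_fresh";
  }
  if (chain.confirmed) return "mark_completed";
  // The blockhash is still valid: signing a second transaction now could pay out twice.
  if (chain.currentBlockHeight <= w.lastValidBlockHeight) return "rebroadcast";
  return "create_new_transaction";
}
```

The key property is that a replacement transaction is only ever created once the original one is provably dead, so at most one of them can move funds.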

Advanced Security: Defending Against Insider Threats

Attack Vector 1: Stolen JWT Secrets

Threat: Malicious insider or hacker obtains JWT secret key, can forge user cookies, and call the withdrawal API.

Mitigation: Multi-Factor Authentication (MFA)

Withdrawal API requires a valid OTP sent to the user’s email/phone

Even with stolen cookies, the attacker needs access to the user’s communication channels

Attack Vector 2: Internal System Bypass

Threat: Malicious developer with server access bypasses public API entirely, directly calls internal MPC functions.

Mitigation: Cryptographic Handshake Between Browser and MPCs

Key Generation: Upon login, the user’s browser generates an ephemeral public/private key pair

Key Registration: Using MFA, the browser registers the public key directly with each MPC server

Request Signing: For withdrawals, the browser signs the request with the private key

Verification: MPCs verify the signature against the registered public key before signing the blockchain transaction

This ensures that even with full backend access, attackers cannot forge withdrawal requests without the user’s private key (which never leaves the browser).
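On the MPC side, the verification step might look like the following sketch. It assumes the MPC node runs on Node.js, that the browser registered its public key as a JWK, and that the request arrives as the exact JSON string that was signed plus a base64 signature. WebCrypto ECDSA signatures use the raw r||s (IEEE P1363) encoding, which is why dsaEncoding is set explicitly. verifyWithdrawalRequest is a hypothetical helper, and the key generation at the bottom only simulates the browser for demonstration.

```typescript
import { createPublicKey, generateKeyPairSync, sign, verify } from "node:crypto";
import type { JsonWebKey } from "node:crypto";

// Returns true only if the payload was signed by the registered browser key.
function verifyWithdrawalRequest(
  registeredKey: JsonWebKey,
  payload: string,
  signatureB64: string,
): boolean {
  const key = createPublicKey({ key: registeredKey, format: "jwk" });
  return verify(
    "sha256",
    Buffer.from(payload, "utf-8"),
    { key, dsaEncoding: "ieee-p1363" },
    Buffer.from(signatureB64, "base64"),
  );
}

// --- Demonstration: simulate the browser's key pair and signature ---
const browserKeys = generateKeyPairSync("ec", { namedCurve: "P-256" });
const registeredJwk = browserKeys.publicKey.export({ format: "jwk" });

const signedPayload = JSON.stringify({ amount: 100, address: "...", userId: 12345 });
const demoSignature = sign("sha256", Buffer.from(signedPayload, "utf-8"), {
  key: browserKeys.privateKey,
  dsaEncoding: "ieee-p1363",
}).toString("base64");

const genuineOk = verifyWithdrawalRequest(registeredJwk, signedPayload, demoSignature);
const tamperedOk = verifyWithdrawalRequest(
  registeredJwk,
  signedPayload.replace("100", "900"), // attacker changes the amount
  demoSignature,
);
```

Verifying the exact string that was signed, rather than re-serializing a parsed object, avoids mismatches caused by key ordering or whitespace.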

Implementation Example:

// Browser-side: Generate and register keys
const keyPair = await crypto.subtle.generateKey(
  {
    name: "ECDSA",
    namedCurve: "P-256",
  },
  false, // private key is non-extractable; the public key can still be exported
  ["sign", "verify"]
);

// Register public key with MPCs (after MFA verification)
const publicKeyJWK = await crypto.subtle.exportKey("jwk", keyPair.publicKey);
await registerKeyWithMPCs(publicKeyJWK, otpToken);

// Sign withdrawal request (sign the exact string that will be sent)
const withdrawalRequest = { amount: 100, address: "...", userId: 12345 };
const payload = JSON.stringify(withdrawalRequest);
const signature = await crypto.subtle.sign(
  { name: "ECDSA", hash: "SHA-256" },
  keyPair.privateKey,
  new TextEncoder().encode(payload)
);

// The signature is an ArrayBuffer; encode it as base64 so it survives JSON
const signatureB64 = btoa(String.fromCharCode(...new Uint8Array(signature)));

// Send signed request to backend
await fetch('/withdraw', {
  method: 'POST',
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify({ request: payload, signature: signatureB64 })
});

Multi-Party Computation (MPC) for Fund Security

Modern exchanges secure their hot wallets using MPC clusters instead of single private keys:

MPC Architecture:

3–5 MPC nodes each hold a “share” of the private key

Threshold signing requires a majority (e.g., 3 of 5) to sign transactions

No single point of failure — compromising one node doesn’t expose funds

Geographic distribution of nodes for additional security

MPC Workflow:

Withdrawal request validated and placed in the queue

Worker process creates an unsigned transaction

Transaction sent to the MPC cluster for signing

Each MPC node validates the request and provides a signature share

Shares combined to create the final transaction signature

Signed transaction broadcast to the blockchain

Proof of Reserves: Building Trust

Leading exchanges are implementing Proof of Reserves to demonstrate they hold sufficient funds to cover user balances. As highlighted by Backpack’s recent announcement, this involves:

Core Components:

Daily Balance Attestations: Cryptographic proofs of all user balances

Merkle Tree Inclusion Proofs: Users can verify their balance is included in the total

External Audits: Third-party verification of reserve calculations

Real-time Reconciliation: Continuous monitoring of asset vs. liability balance
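To illustrate the Merkle inclusion proof component, here is a minimal sketch using SHA-256 from Node's crypto module. It is a simplified illustration, not Backpack's scheme: the leaf encoding, the duplication of the last node on odd levels, and all function names are assumptions, the tree must be built from at least one leaf, and production systems typically use a Merkle sum tree that also commits to the total of all balances.

```typescript
import { createHash } from "node:crypto";

const sha256 = (data: string): string =>
  createHash("sha256").update(data).digest("hex");

interface ProofStep {
  sibling: string;
  siblingOnLeft: boolean;
}

// Leaf commits to one user's balance (assumed encoding).
function leafHash(userId: string, balance: number): string {
  return sha256(`leaf:${userId}:${balance}`);
}

// Builds every level of the tree, from the leaves up to the single root.
function buildLevels(leaves: string[]): string[][] {
  const levels: string[][] = [leaves];
  while (levels[levels.length - 1].length > 1) {
    const prev = levels[levels.length - 1];
    const next: string[] = [];
    for (let i = 0; i < prev.length; i += 2) {
      const right = prev[i + 1] ?? prev[i]; // duplicate the last node on odd levels
      next.push(sha256(`node:${prev[i]}:${right}`));
    }
    levels.push(next);
  }
  return levels;
}

// Collects the sibling hashes a user needs to recompute the root.
function proofFor(levels: string[][], leafIndex: number): ProofStep[] {
  const proof: ProofStep[] = [];
  let index = leafIndex;
  for (let l = 0; l < levels.length - 1; l++) {
    const level = levels[l];
    const isRight = index % 2 === 1;
    const siblingIndex = isRight ? index - 1 : index + 1;
    proof.push({
      sibling: level[siblingIndex] ?? level[index],
      siblingOnLeft: isRight,
    });
    index = Math.floor(index / 2);
  }
  return proof;
}

// User-side check: does my leaf hash up to the published root?
function verifyInclusion(leaf: string, proof: ProofStep[], root: string): boolean {
  let hash = leaf;
  for (const step of proof) {
    hash = step.siblingOnLeft
      ? sha256(`node:${step.sibling}:${hash}`)
      : sha256(`node:${hash}:${step.sibling}`);
  }
  return hash === root;
}

// Illustrative balances for three hypothetical users.
const leaves = [leafHash("alice", 400), leafHash("bob", 250), leafHash("carol", 100)];
const levels = buildLevels(leaves);
const merkleRoot = levels[levels.length - 1][0];
const bobProof = proofFor(levels, 1);
```

The exchange publishes only the root; each user receives their own proof and can check it locally without learning anyone else's balance.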

Implementation Considerations:

Physical Balances Only: Include assets that can be “touched” on-chain (available balances, open orders, unborrowed lends)

Margin Risk Management: Over-collateralized borrows must be factored into reserve calculations

Fiat Complications: Traditional bank deposits require special handling (deposit tokens)

Perpetual Futures: Real-time P&L settlement automatically included in reserves

Cost Optimization Strategies

Cheap Indexing for a Single Address

For applications using memo-based deposits, we can avoid expensive Yellowstone subscriptions:

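// Simple polling indexer
// In production, persist processed signatures in the database instead of memory
const processedSignatures = new Set();

async function pollForTransactions() {
  // Under heavy load, page with the `before`/`until` options so no signature is missed
  const signatures = await connection.getSignaturesForAddress(
    ourDepositAddress,
    { limit: 50 }
  );

  for (const sig of signatures) {
    // Skip failed transactions and ones we have already handled
    if (sig.err || processedSignatures.has(sig.signature)) continue;

    const transaction = await connection.getTransaction(sig.signature, {
      maxSupportedTransactionVersion: 0
    });
    if (!transaction) continue; // not available yet; retry on the next poll

    await processMemoTransaction(transaction);
    processedSignatures.add(sig.signature);
  }
}

// Run every 20 seconds
setInterval(pollForTransactions, 20000);

This approach costs nearly nothing compared to $200–1000/month Yellowstone subscriptions.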

When to Use Expensive Indexing

Reserve Yellowstone/Geyser for scenarios requiring:

Multiple address monitoring (unique deposit addresses)

Real-time processing (high-frequency trading, liquidations)

Program-wide monitoring (DeFi protocol indexing)

Cross-chain arbitrage (sub-second transaction processing)

Production Deployment Checklist

Infrastructure Requirements:

Message queue system (Kafka/Redis) for withdrawal processing

Database with proper indexing on user_id, transaction signatures

MPC cluster for secure transaction signing

Monitoring and alerting for all critical processes

Geographic redundancy for indexing infrastructure

Security Measures:

MFA is required for all withdrawal operations

Browser-MPC cryptographic handshake implemented

Regular security audits and penetration testing

Incident response procedures documented

Key rotation procedures for MPC nodes

Operational Procedures:

Daily proof of reserves generation

User balance reconciliation monitoring

Withdrawal queue monitoring and alerting

Regular sweeper execution (if using unique addresses)

Gas fee optimization for blockchain operations

Conclusion

Building a robust indexing infrastructure on Solana requires careful consideration of security, cost, and operational complexity. The memo-based deposit approach provides an excellent balance of simplicity and security for most applications, while proper withdrawal architecture with MPC security and queue-based processing ensures funds remain safe.

Key takeaways for implementation:

Use memo-based deposits unless we have specific requirements for unique addresses

Implement proper withdrawal queues with idempotent processing

Secure hot wallets with MPC clusters and cryptographic handshakes

Consider proof of reserves for building user trust

Optimize costs by avoiding expensive indexing when simple polling suffices

The landscape of centralized crypto infrastructure continues to evolve toward more transparent, verifiable, and secure systems. By implementing these patterns correctly, we can build applications that offer the user experience of centralized systems with security guarantees that approach those of decentralized protocols.

Written with ❤ by Prapti for the Solana community.